In order to work as intended, this site stores cookies on your device. Accepting improves our site and provides you with personalized service.

Click here to learn more

Click here to learn more

User Acceptance Testing (UAT) is one of the latter stages of a software implementation project. UAT fits in the project timeline between the completion of configuration / customisation of the system and go live. Within a regulated lab or clinical setting UAT can be informal testing prior to validation, or more often forms the Performance Qualification (PQ).

Whether UAT is performed in a non-regulated or regulated environment it is important to note that UAT exists to ensure that business processes are correctly reflected within the software. In short, does the new software function correctly for your ways of working?

You would never go into any project without clear objectives, and software implementations are no exception. It is important to understand exactly how you need software workflows and processes to operate.

To clarify your needs, it is essential to have a set of requirements outlining the intended outcomes of the processes. How do you want each workflow to perform? How will you use this system? What functionality do you need and how do you need the results presented? These are all questions that must be considered before going ahead with a software implementation project.

Creating detailed requirements will highlight areas of the business processes that will need to be tested within the software by the team leading the User Acceptance Testing.

Requirements, like the applications they describe, have a lifecycle and they are normally defined early in the purchase phase of a project. These ‘pre-purchase’ requirements will be product independent and will evolve multiple times as the application is selected, and implementation decisions are made.

While it is good practice to constantly revise the requirements list as the project proceeds, it is often the case that they are not well maintained. This can be due to a variety of reasons, but regardless of the reason you should ensure the system requirements are up to date before designing your plan for UAT.

A common mistake for inexperienced testing teams is to test too many items or outcomes. It may seem like a good idea to test as much as possible, but this invariably means all requirements from critical to the inconsequential are tested to the same low level.

Requirements are often prioritised during product selection and implementation phases according to MoSCoW analysis. This divides requirements into Must-have, Should-have, Could-have and Wont-have and is a great tool for assessing requirements in these earlier phases.

During the UAT phase these classifications are less useful, for example there may be requirements for a complex calculation within a LIMS, ELN or ePRO system. These calculations may be classified as ‘Could-have’ or low priority because there are other options to perform the calculations outside of the system. However, if these calculations are added to the system during implementation, they are most likely, due to their complexity, a high priority for testing.

To avoid this the requirements, or more precisely their priorities, need to be re-assessed as part of the initial UAT phase.

A simple but effective way to set priority is to assess each requirement against the risk criteria and assign a testing score. The following criteria are often used together to assess risk:

Once the priority of the requirements has been classified the UAT team can then agree how to address the requirements in each category.

A low score could mean the requirement is not tested or included in a simple checklist.

A medium score could mean the requirement is included in a test script with several requirements.

A high score could mean the requirement is the subject of a dedicated test script.

A key question often asked of our team is how many test scripts will be needed and in what order should they be executed? These questions can be answered by creating a Critical Test Plan (CTP). The CTP approach requires that you first rise above the requirements and identify the key business workflows you are replicating in the system. For a LIMS system these would include:

Sample creation, Sample Receipt, Sample Prep, Testing, Result Review, Approval and Final Reporting.

Next the test titles required for each key workflow are added in a logical order to a CTP diagram, which assists in clarifying the relationship between each test. The CTP is also a great tool to communicate the planned testing and helps to visualise any workflows that may have been overlooked.

Now that the test titles have been decided upon, requirements can be assigned to a test title and we are ready to start authoring the scripts.

There are several different approaches to test script formats. These range from simple checklists, ‘objective based’ where an overview of the areas to test are given but not the specifics of how to test, to very prescriptive step by step instruction-based scripts.

When testing a system within the regulated space you generally have little choice but to use the step by step approach.

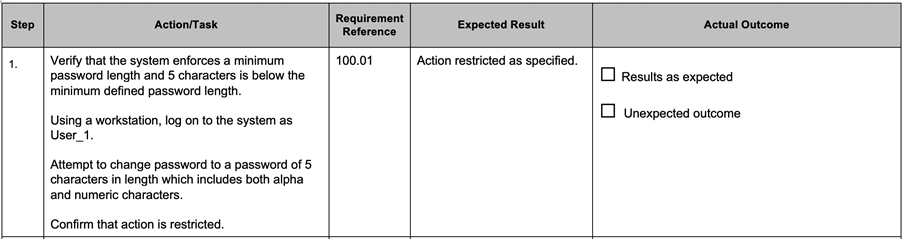

Test scripts containing step by step instruction should have a number of elements for each step:

A typical example is given below.

However, when using the step by step format for test scripts, there are still pragmatic steps that can be taken to ensure efficient testing.

Data Setup – Often it is necessary to create system objects to test within a script. In an ELN this could be an experiment, reagent or instrument, or in ePRO a subject or site. If you are not directly testing the creation of these system objects in the test script, their creation should be detailed in a separate data setup section outside of the ‘step by step’ instructions. Besides saving time during script writing, any mistakes made in the data setup will not be classified as script errors and can be quickly corrected without impacting test execution.

Low Risk Requirements – If you have decided to test low risk requirements then consider the most appropriate way to demonstrate that they are functioning correctly. A method we have used successfully is to add low risk requirements to a table outside of the step by step instructions. The table acts as a checklist with script executers marking each requirement that they see working correctly during executing the main body of step by step instructions. This avoids adding the low requirements into the main body of the test script but still ensures they are tested.

Test Script Length – A common mistake made during script writing is to make them too long. If a step fails while executing a script, one of the resulting actions could be to re-run the script. This is onerous enough when you are on page 14 of a 15 page script. However, this is significantly more time-consuming if you are on page 99 out of 100. While there is no hard and fast rule on number of steps or pages to have within a script, it is best to keep them to a reasonable length. An alternative way to deal with longer scripts is to separate them into sections which allows the option of restarting the current block of instructions within a script, instead of the whole script.

An important task when co-ordinating UAT is be fully transparent about which requirements are to be tested and in which scripts. We recommend adding this detail against each requirement in the User Requirements Specification (URS). This appended URS is often referred to as a Requirements Trace Matrix. For additional clarity we normally add a section to each test script that details all the requirements tested in the script as well as adding the individual requirement identifiers to the steps in the scripts that test them.

UAT is an essential phase in implementing new software, and for inexperienced users it can become time-consuming and difficult to progress. However, following the above steps from our team of experts will assist in authoring appropriate test scripts and leading to the overall success of a UAT project. In a future blog we will look at dry running scripts and formal test execution, so keep an eye on our Opinion page for further updates.